- Sat 25 August 2018

- Data Analysis, Machine Learning

- William Miller

- #data analysis, #Amazon reviews, #LDA Topic Modeling

Amazon.com and the Rise of Useless Reviews

How book reviews on Amazon have become less helpful

(Note: This project makes use of a random sample from a database of 8.9 million Amazon book reviews available at: http://jmcauley.ucsd.edu/data/amazon/)

Over the past decade, Amazon has come to dominate the online marketplace. In 2016, Amazon accounted for 43% of online purchases, and that percentage is expected to increase over time (according to BusinessInsider.com and Statista.com). In 2017, 64% of U.S. households had an Amazon Prime membership (Forbes.com). As anyone who has shopped online knows, product reviews are a major benefit of online shopping, giving consumers unprecedented amounts of information with which to make purchasing decisions. And as Amazon has grown, so has the number of product reviews it has available.

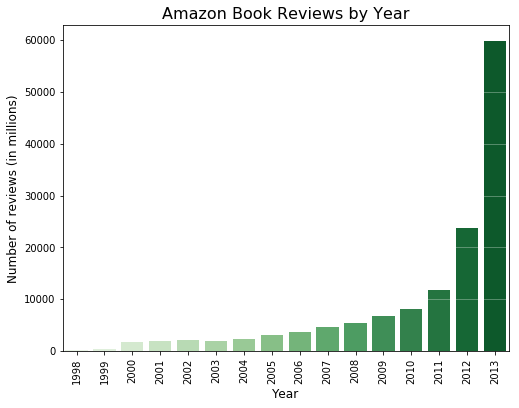

(Note: 2014 is omitted in the plot above because the sampled dataset only includes ratings and reviews through July of 2014.)

Drawing from a database of 8.9 million book reviews on Amazon, it is evident that there has been a dramatic increase in product reviews for books alone. At first glance, this would seem to be a very positive trend for consumers who read reviews before making online purchases, as there has never before been so much information available to inform a purchase. However, closer scrutiny reveals that this is not such a straightforward conclusion.

As anyone who has shopped on Amazon has likely seen, each review has "helpful" and "not helpful" buttons underneath it. These provide feedback to Amazon, the reviewer, and other customers, and they are really the only guidance available when choosing which reviews, out of hundreds or sometimes thousands, to consider trustworthy.

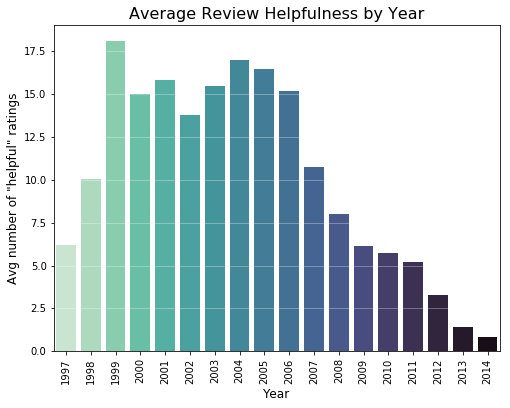

A quick look at the average number of "helpful" ratings given to reviews on Amazon over time reveals an interesting trend.

As can be seen in the plot above, the average "helpful" rating of reviews declined dramatically from 2006 to 2007 and has continued to decline ever since. It is apparent that, while the number of book reviews on Amazon has grown very rapidly, a much lower percentage of people consider them helpful.

As the number of book reviews on Amazon has increased dramatically, the number that are rated helpful has not increased proportionately. Compared to the years 2000 to 2008, the years 2011 to 2014 have a much smaller percentage of reviews that have been rated helpful by even one person - even though far more reviews are posted in these years.

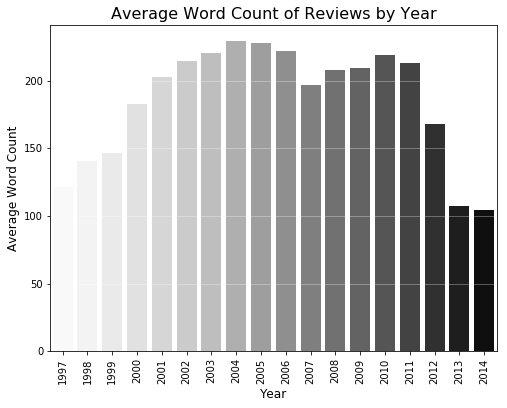

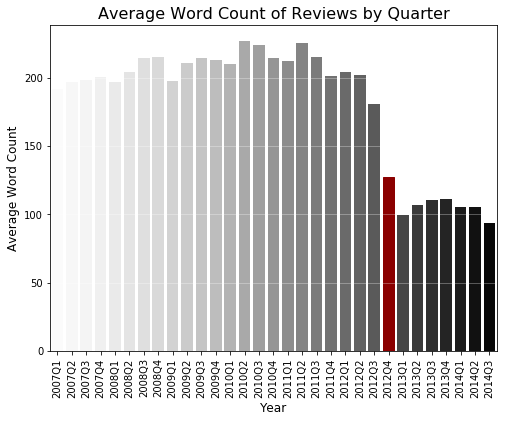
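The yearly helpfulness figures discussed above can be reproduced with a simple aggregation. The following is a minimal sketch, not the code used for this post. It assumes each review is a dictionary with a `unixReviewTime` timestamp and a `helpful` pair of `[helpful votes, total votes]`, which is how the source dataset appears to store them; the function name and the sample records are made up for illustration.

```python
from collections import defaultdict
from datetime import datetime, timezone

def helpfulness_by_year(reviews):
    """Return {year: (mean helpful votes per review, share with >= 1 helpful vote)}."""
    votes = defaultdict(list)
    for review in reviews:
        year = datetime.fromtimestamp(review["unixReviewTime"], tz=timezone.utc).year
        votes[year].append(review["helpful"][0])  # assumed: first element = helpful votes
    return {
        year: (sum(v) / len(v), sum(1 for n in v if n > 0) / len(v))
        for year, v in sorted(votes.items())
    }

# Tiny made-up sample, for illustration only (not real data).
sample = [
    {"unixReviewTime": 1136073600, "helpful": [5, 6]},  # 2006
    {"unixReviewTime": 1146073600, "helpful": [1, 1]},  # 2006
    {"unixReviewTime": 1370000000, "helpful": [0, 0]},  # 2013
    {"unixReviewTime": 1380000000, "helpful": [0, 2]},  # 2013
]
for year, (mean_votes, share_rated) in helpfulness_by_year(sample).items():
    print(year, mean_votes, share_rated)
```

Tracking both numbers matters because a handful of heavily upvoted reviews can hold the average up even while the share of reviews with any helpful vote falls.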

There are a number of possible causes for this, one of which is simply that people are not putting as much effort into writing reviews. One way to measure this is to look at the average length of reviews over time, as can be seen in the chart below.

A very large drop-off in the length of the average Amazon review can be observed starting in 2012 and becoming more drastic in 2013 and 2014. This coincides with the steepest drop-offs in the helpfulness of the average review, as well as the sharpest increases in the number of book reviews on Amazon.

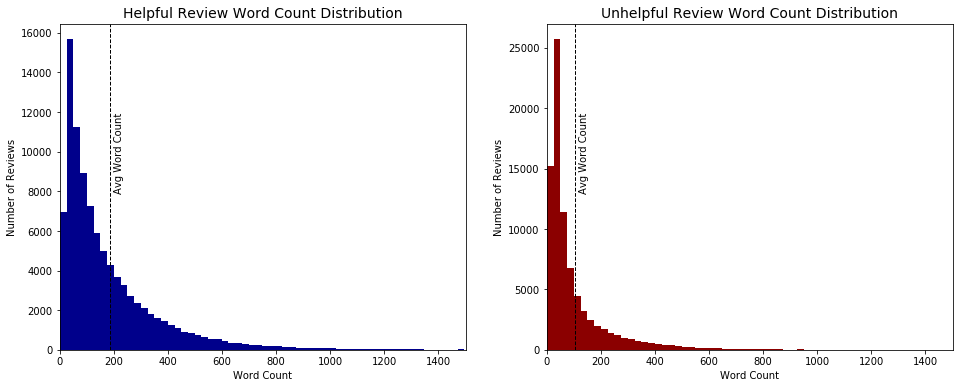

Average word count of reviews with at least 1 "helpful" rating = 185

Average word count of reviews with zero "helpful" ratings = 104

As the charts above show, longer reviews do tend to be rated as "helpful" more often. The plot on the left shows the distribution of word counts for reviews that have at least one helpful rating, with the average word count for these being 185. This is roughly 78% higher than the average of 104 words for reviews that received no helpful ratings at all. The distribution of word counts for reviews with no helpful ratings can be seen in the plot on the right, which shows an average word count much lower than that of reviews that have been rated helpful.
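The comparison of average word counts could be computed along the following lines. This is a sketch under assumptions: the `reviewText` and `helpful` field names are taken to match the source dataset, words are counted by splitting on whitespace (the post does not say how words were counted), and the sample reviews are invented.

```python
from statistics import mean

def mean_word_counts(reviews):
    """Return (mean words in reviews with >= 1 helpful vote, mean words in the rest)."""
    rated, unrated = [], []
    for review in reviews:
        n_words = len(review["reviewText"].split())  # whitespace tokenization (assumed)
        (rated if review["helpful"][0] > 0 else unrated).append(n_words)
    # Both groups must be non-empty, otherwise statistics.mean raises an error.
    return mean(rated), mean(unrated)

# Made-up sample, for illustration only.
sample = [
    {"reviewText": "one two three four", "helpful": [2, 2]},
    {"reviewText": "one two", "helpful": [1, 3]},
    {"reviewText": "short", "helpful": [0, 1]},
]
rated_mean, unrated_mean = mean_word_counts(sample)
print(rated_mean, unrated_mean)
```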

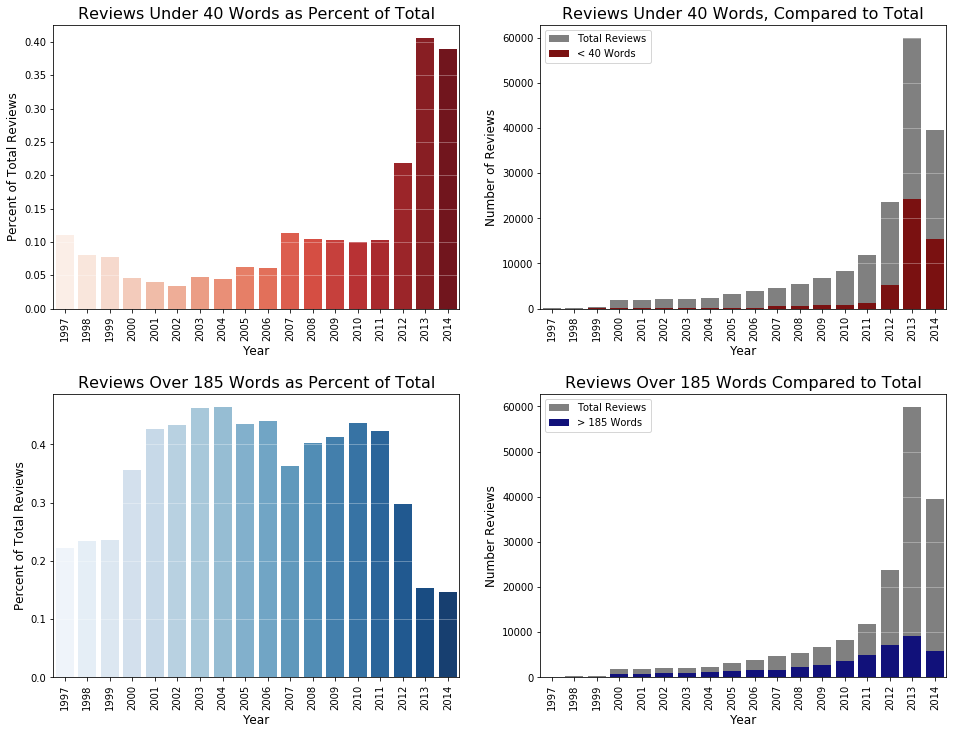

Looking into this further, a sharp decline in the number of reviews consisting of more than 185 words is apparent, as is a drastic increase in reviews consisting of fewer than 40 words.

In 2011, more than 40% of book reviews on Amazon consisted of more than 185 words, and 11% of reviews consisted of fewer than 40 words. Within the span of 2 years, this comparison was turned on its head. In 2013, over 40% of reviews consisted of fewer than 40 words. Only 15% of reviews consisted of more than 185 words.
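The two length buckets used here (fewer than 40 words, more than 185 words) are straightforward to compute for any group of reviews, for example all reviews from a single year. The sketch below is illustrative only; the function name, the whitespace-based word count, and the sample texts are assumptions.

```python
def length_shares(texts, short_max=40, long_min=185):
    """Return (share with fewer than short_max words, share with more than long_min words)."""
    counts = [len(text.split()) for text in texts]
    short = sum(1 for c in counts if c < short_max)
    long_ = sum(1 for c in counts if c > long_min)
    return short / len(counts), long_ / len(counts)

# Made-up sample: two 2-word reviews, one 200-word review, one 100-word review.
sample = ["great book"] * 2 + ["word " * 200] + ["word " * 100]
short_share, long_share = length_shares(sample)
print(f"under 40 words: {short_share:.0%}, over 185 words: {long_share:.0%}")
```

Reviews between 40 and 185 words fall into neither bucket, which is why the two shares need not sum to 100%.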

From all of the plots above, it seems evident that while Amazon reviews have become more numerous, they've also become much shorter, and are generally considered less helpful. 2012 seems to be a pivotal year in this trend, as the number of reviews under 40 words rose sharply in this year and the number of reviews over 185 words fell. Some research yields a potential reason.

In 2012 Amazon began removing reviews that did not meet certain criteria, and it removed them in large numbers. A New York Times article described Amazon's policy as:

Giving rave reviews to family members is no longer acceptable. Neither is writers’ reviewing other writers. But showering five stars on a book you admittedly have not read is fine.

The blogosphere also lit up with commentary and investigation. Some reviewers who complained about their reviews received a form letter from Amazon stating:

I'm sorry for any previous concerns regarding your reviews on our site. We do not allow reviews on behalf of a person or company with a financial interest in the product or a directly competing product. This includes authors, artists, publishers, manufacturers, or third-party merchants selling the product.

We have removed your reviews as they are in violation of our guidelines. We will not be able to go into further detail about our research.

I understand that you are upset, and I regret that we have not been able to address your concerns to your satisfaction. However, we will not be able to offer any additional insight or action on this matter.

Many authors posted complaints on their blogs that their book reviews were disappearing. One such author, Joe Konrath, complained to Amazon, stating:

My reviews followed all of Amazon's guidelines, and had received hundreds of helpful votes. They informed customers, and they helped sell books. They represented a significant time investment on my part, and they were honest and accurate and fully disclosed my relationships with the author I reviewed if I happened to know them. And these reviews were deleted without warning or explanation.

An article at Forbes.com chronicled many more complaints by authors and other reviewers.

Amazon began implementing this policy in September of 2012. Looking again at the decline in average review length, viewing it by quarter instead of by year, shows that this policy affected average review length immediately and drastically.
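A quarterly breakdown of this kind can be sketched as follows. It is not the code behind the plot; the `unixReviewTime` and `reviewText` field names are assumed to match the source dataset, and the `ts` helper and sample reviews are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone

def mean_length_by_quarter(reviews):
    """Return {(year, quarter): mean word count of reviews posted in that quarter}."""
    totals = defaultdict(lambda: [0, 0])  # (year, quarter) -> [word total, review count]
    for review in reviews:
        when = datetime.fromtimestamp(review["unixReviewTime"], tz=timezone.utc)
        key = (when.year, (when.month - 1) // 3 + 1)
        totals[key][0] += len(review["reviewText"].split())
        totals[key][1] += 1
    return {key: words / n for key, (words, n) in sorted(totals.items())}

def ts(year, month, day):
    """Hypothetical helper: UTC date -> Unix timestamp."""
    return int(datetime(year, month, day, tzinfo=timezone.utc).timestamp())

# Made-up sample spanning the third and fourth quarters of 2012.
sample = [
    {"unixReviewTime": ts(2012, 8, 15), "reviewText": "a long and careful review here"},
    {"unixReviewTime": ts(2012, 11, 2), "reviewText": "loved it"},
    {"unixReviewTime": ts(2012, 12, 20), "reviewText": "great read"},
]
print(mean_length_by_quarter(sample))
```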

It appears that when Amazon decided to remove reviews written by people who write as a profession, the average review length dropped immediately. Since longer reviews tend to be rated as helpful, fewer reviews were then considered helpful. The changes in policy simultaneously allowed the spamming of low-content reviews, so the number of reviews grew dramatically.

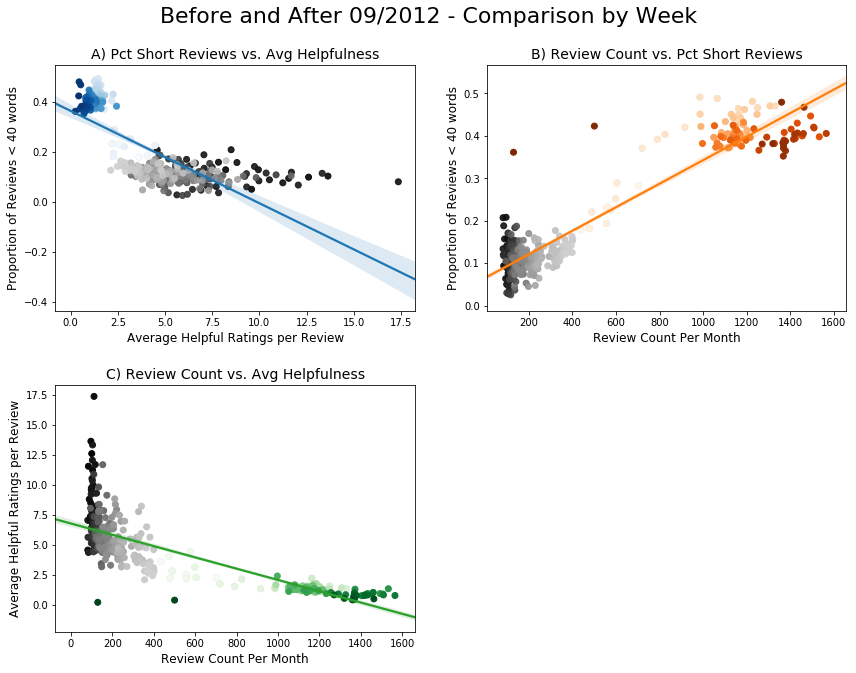

This gives us three metrics to compare for correlation: the number of reviews overall, the proportion of those that are fewer than 40 words, and the number of helpful ratings given per review. These will be compared on a monthly basis from 2011 onward.

Plot A) R-squared value: 0.57, p-value: 0.00

Plot B) R-squared value: 0.89, p-value: 0.00

Plot C) R-squared value: 0.60, p-value: 0.00

In plot A above, the average number of helpful ratings per review fell as the percentage of reviews shorter than 40 words went up, with the greyscale cluster representing reviews written before Amazon's change in policy and blue representing those written after.

From plot B, it is evident that the number of reviews shorter than 40 words increased dramatically after the change in policy, with the greyscale cluster again representing reviews written before the policy change.

From plot C, using a similar color scheme, we can see that the average number of helpful ratings per review fell dramatically after Amazon's change in policy.

All of the plots above fall into roughly two groups, and the division between those groups can be clearly drawn between the months before the beginning of September 2012, when Amazon implemented its new review policies, and those after.

The relationship between the values in each of these plots is statistically significant. The share of reviews under 40 words explains 57% of the variance in average helpful ratings per review. Review counts explain 89% of the variance in the share of reviews under 40 words and 60% of the variance in average helpful ratings per review.
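For a straight-line fit between two variables, R-squared is the square of the Pearson correlation coefficient, which is what "explains X% of the variance" refers to. A minimal sketch of that calculation is shown here; the monthly values are invented for illustration and are not taken from the dataset.

```python
def r_squared(xs, ys):
    """R-squared of a simple least-squares line, i.e. the squared Pearson correlation."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy ** 2 / (sxx * syy)

# Made-up monthly values: share of reviews under 40 words vs. helpful votes per review.
short_share = [0.10, 0.12, 0.15, 0.30, 0.38, 0.42]
helpful_avg = [2.1, 2.0, 1.7, 0.9, 0.8, 0.5]
print(round(r_squared(short_share, helpful_avg), 2))
```

Because the correlation is squared, R-squared does not show the direction of the relationship; that has to be read from the plot or from the sign of the slope.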

It is clear that there is a relationship between all of these factors, and that Amazon's change in policy strongly affected the nature of reviews on its site. However, since this is only one relatively small sample, I will draw repeated random resamples of this data to check that the results are stable.

Plot A) The 95% confidence interval for R-squared replicates is (0.51, 0.65)

Plot B) The 95% confidence interval for R-squared replicates is (0.86, 0.91)

Plot C) The 95% confidence interval for R-squared replicates is (0.54, 0.67)

Replicating the Amazon review data 10,000 times and calculating the R-squared and p-values for these replicates shows that the values obtained are well within a 95% confidence interval calculated through replication.
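One common way to carry out this kind of replication is a pairs bootstrap: resample the monthly (x, y) points with replacement, recompute R-squared each time, and take the 2.5th and 97.5th percentiles of the replicates. The sketch below assumes that approach, which may differ in detail from the procedure actually used for this post; the function names and data are made up.

```python
import random

def r_squared(xs, ys):
    """Squared Pearson correlation between xs and ys."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy ** 2 / (sxx * syy)

def bootstrap_r2_interval(xs, ys, n_replicates=10_000, seed=0):
    """Percentile 95% interval for R-squared from a pairs bootstrap."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n = len(xs)
    replicates = []
    while len(replicates) < n_replicates:
        idx = [rng.randrange(n) for _ in range(n)]  # resample points with replacement
        bx, by = [xs[i] for i in idx], [ys[i] for i in idx]
        if len(set(bx)) < 2 or len(set(by)) < 2:
            continue  # degenerate resample: R-squared is undefined
        replicates.append(r_squared(bx, by))
    replicates.sort()
    return replicates[int(0.025 * n_replicates)], replicates[int(0.975 * n_replicates)]

# Made-up monthly data, for illustration only.
xs = [0.10, 0.11, 0.12, 0.14, 0.15, 0.18, 0.30, 0.33, 0.38, 0.40, 0.42, 0.45]
ys = [2.1, 2.3, 2.0, 1.8, 1.7, 1.9, 0.9, 1.1, 0.8, 0.6, 0.5, 0.7]
low, high = bootstrap_r2_interval(xs, ys)
print(f"95% interval for R-squared: ({low:.2f}, {high:.2f})")
```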

The replicates confirm that the relationship between these three metrics is robust, and the timing of the shift points strongly to Amazon's change in policy as the cause.

But this still leaves open the question of whether Amazon's change in policy is better for consumers, regardless of how often people hit the "helpful" button on reviews. One way to measure this is to look at how much information is contained within reviews written before Amazon's policy change compared to those written afterwards. This can be done by fitting an unsupervised LDA topic model to all of the reviews, to the reviews before, and to the reviews after, then comparing the results. The results consist of two metrics, as well as a plot showing the distribution of topics discovered by the LDA model. If the topics in the plot are closely clustered together, they have a high degree of similarity, and therefore convey less unique information than topics that are spread further apart.

The two metrics provided by the LDA model are perplexity and coherence. Lower perplexity scores indicate that the LDA model predicts a sample better than higher perplexity scores. In this instance the coherence score is the most useful metric, with higher coherence scores being better. A higher coherence score indicates that the words in each topic are related and meaningful. For instance, the set {sport, ball, team, score} would have a high degree of coherence because the words are both specific and related by topic, while {want, go, know, be} would have a low degree of coherence because the words are general and not related by topic.
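To make the idea of coherence concrete, the sketch below scores the two example word sets with a simplified UMass-style measure, which rewards word pairs that appear in the same documents. This is an illustrative stand-in and not necessarily the coherence measure used for the scores in this post; the function name and the six-sentence corpus are made up, and the pair scores are averaged rather than summed.

```python
from itertools import combinations
from math import log

def umass_style_coherence(topic_words, documents):
    """Average of log((docs containing both words + 1) / docs containing the first word)."""
    doc_sets = [set(doc.lower().split()) for doc in documents]

    def doc_freq(*words):
        return sum(1 for d in doc_sets if all(w in d for w in words))

    scores = []
    # combinations() keeps list order, so `first` is the higher-ranked topic word.
    for first, second in combinations(topic_words, 2):
        # Assumes every topic word occurs in at least one document.
        scores.append(log((doc_freq(first, second) + 1) / doc_freq(first)))
    return sum(scores) / len(scores)

# Tiny made-up corpus, for illustration only.
corpus = [
    "the team won because the ball found the net and the score held",
    "a sport needs a ball a team and a score",
    "i want to go",
    "you know it will be fine",
    "we want to know the score",
    "they go where the team and ball go in this sport",
]
specific = umass_style_coherence(["sport", "ball", "team", "score"], corpus)
general = umass_style_coherence(["want", "go", "know", "be"], corpus)
print(round(specific, 3), round(general, 3))
```

On this toy corpus the specific set scores higher than the general one, because its words tend to appear together in the same sentences.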

The number of topics must be set beforehand. A variety of topic counts were tested; 15 topics offered a good balance of calculation speed and clarity, with results consistent with the other counts tested.

The perplexity and coherence scores for all of the reviews are:

Perplexity: -7.79812496773

Coherence Score: 0.417174912863

These provide a valuable reference point when looking at reviews before and after the policy change.

The following are the scores for reviews written before Amazon's change in policy:

Perplexity: -7.89364854513

Coherence Score: 0.46122500792

It is clear that the perplexity score is lower than that of all of the reviews considered as a whole, and that the coherence score is over 10% higher.

Finally, we have the scores for reviews written after the policy change.

Perplexity: -7.34654096094

Coherence Score: 0.357382024741

The perplexity score for these reviews is noticeably higher than that of either of the other sets of reviews, indicating that it was not as possible to predict topics for a sample from these reviews as it was from either of the other sets. Moreover, the coherence score for the reviews written after the policy change (0.357) was about 14% lower than that of all reviews together (0.417), and about 23% lower than that of reviews written before the change (0.461).

The conclusions drawn from analyzing the coherence and perplexity scores of the topic models can be seen more tangibly in the plots of their topics below. The topics in the top-most plot are for the reviews written before Amazon's policy change.

The plots are interactive, so all of the topics and most common words in them can be seen by hovering the cursor over them.

The topics in the plot above are fairly spread out, indicating well-defined topics, and the words within each topic tend to be closely related. While the largest topics on the map contain a larger number of less-related words, the topics gain a lot more definition after the first three.

Below is an interactive topic plot for reviews written after Amazon's change in review policy.

The topics in the lower plot are clustered much more closely together, which indicates that the topics are not well defined and may overlap significantly. The words in the largest topics tend to be less related than those in the topics before the policy change, and this extends out through the fifth most common topic. Much more overlap is apparent in this model even for the higher-numbered, smaller topics.

It is safe to conclude from all of the above that Amazon's reviews are both less helpful and less informative than they were before the policy change in 2012. It is evident that, if Amazon's goal was to maximize the helpfulness of reviews on its site, the policy change was a poor decision. Barring people from writing reviews who love writing enough to dedicate their lives to it seems counter-intuitive, and its negative effects can be objectively observed. Instead of the lengthier, more helpful reviews which predominated among Amazon book reviews until September of 2012, consumers are now met with very short reviews which, apparently, very few of them find helpful.

Anyone who purchases on Amazon, whether for personal or professional reasons, would benefit from keeping the effects of this policy change in mind and adopting the following strategies as a result:

- More reviews are not necessarily better, even if they tend to be positive. Maintain a healthy suspicion of short reviews.

- Seek out longer reviews. They may take longer to read, but they will almost always contain more information.

- Seek out reviews that are helpful, and use the "helpful" button to rate reviews and guide others to those that are worthwhile.